These are my notes for where I can see SPU varying from ia32, as presented in the video Part 7 — Working with Strings.

The ia32 instructions covered in this video (MOVSx, LODSx, STOSx, CMPSx, CLD, STD) clearly highlight many of the differences between that arch and SPU:

- Implied operand registers, e.g. MOVSx using %esi and %edi as the source and destination addresses.

- Side effects on operands, e.g. incrementing addresses while performing reads/writes.

- Side effects in the FLAGS register, e.g. the direction flag and zero flag.

- Support for any alignment of data.

I’ve made less effort to re-implement the full functionality of the ia32 instructions for this part. There are a couple of cases where it might be interesting to try, but a fully general version could probably just be lifted from newlib’s memcpy() implementation for SPU.

Again, this is the quick SPU instruction reference I use, and I still regularly refer to the SPU ISA doc.

Working with Strings

Starting with StringBasics.s, first there’s some storage:

```
    .data
    .align 4
HelloWorldString:
    .asciz "Hello World of Assembly!"
    .align 4
H3110:
    .asciz "H3110"
    .align 4
shuf_AABABCDghijklmnop:
    .int 0x00000100,0x01020317,0x18191a1b,0x1c1d1e1f
#.bss
    .comm Destination, 100, 16
    .comm DestinationUsingRep, 100, 16
    .comm DestinationUsingStos, 100, 16
```

Notable changes from the original:

- Added .align 4 before each label to provide 16-byte alignment

- There’s a shuffle pattern added that I’ll write more about later

- .bss is commented out because spu-as doesn’t like it (I’m still not clear on why this is the case)

- spu-as doesn’t support alignment for .lcomm but does for .comm (why?), so .lcomm has been replaced with .comm and a trailing “, 16” added to each case to provide 16-byte alignment.

(Learned: .align has no effect on .comm/.lcomm, and .comm’s alignment argument is a plain byte count rather than a power of 2 à la .align. There’s nothing hard about assembly programming, really :\)
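The two conventions are easy to mix up, so here is the arithmetic as a small C sketch. It's plain host C rather than SPU code, `dot_align_bytes` and `align_up` are hypothetical helper names, and it assumes (as above) that spu-as reads `.align n` as 2^n bytes while the third argument of `.comm` is already a byte count.

```c
#include <assert.h>
#include <stdint.h>

/* spu-as reads ".align n" as an exponent: the boundary is 2^n bytes. */
static uint32_t dot_align_bytes(unsigned n) {
    assert(n < 32);
    return 1u << n;
}

/* Round addr up to a power-of-two byte boundary, which is what
   ".comm sym, size, 16" asks for directly. */
static uint32_t align_up(uint32_t addr, uint32_t boundary) {
    assert(boundary != 0 && (boundary & (boundary - 1)) == 0);
    return (addr + boundary - 1) & ~(boundary - 1);
}
```

So `.align 4` and a `.comm` alignment of `16` both amount to `align_up(addr, 16)`, whereas writing `.comm sym, 100, 4` would only request a 4-byte boundary.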

1. Simple copying using movsb, movsw, movsl

```
    #movl $HelloWorldString, %esi
    #movl $Destination, %edi

    ila     $5,HelloWorldString
    ila     $6,Destination

    # reverse order of instructions to avoid even sillier alignment hassle:
    # word first, then halfword, then byte

    #movsl
    lqd     $7,0($5)
    lqd     $8,0($6)
    rotqby  $10,$7,$5         # rotate by the address to get the word into the pref slot
    cwd     $11,0($6)
    shufb   $12,$10,$8,$11    # shuffle word into dest
    stqd    $12,0($6)
    ai      $5,$5,4
    ai      $6,$6,4

    #movsw
    lqd     $7,0($5)
    lqd     $8,0($6)
    ai      $9,$5,-2          # rotate needed to get val into pref slot
    rotqby  $10,$7,$9
    chd     $11,0($6)
    shufb   $12,$10,$8,$11    # shuffle halfword into dest
    stqd    $12,0($6)
    ai      $5,$5,2
    ai      $6,$6,2

    #movsb
    lqd     $7,0($5)
    lqd     $8,0($6)
    ai      $9,$5,-3          # rotate needed to get val into pref slot
    rotqby  $10,$7,$9
    cbd     $11,0($6)
    shufb   $12,$10,$8,$11    # shuffle byte into dest
    stqd    $12,0($6)
    ai      $5,$5,1
    ai      $6,$6,1
```

Of the examples in this part, this is my biggest attempt at a “complete” implementation of the ia32 instructions, and even then it’s built on an assumption of natural alignment (of words and halfwords) that the ia32 code doesn’t need. Lots of effort to achieve some simple tasks.
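To check my reading of the rotate-and-insert sequence, here is a host-side C model of it. It's a sketch under some assumptions: quadwords are 16-byte arrays in big-endian order, local store is a flat byte array, `shufb` only handles byte-select control values (all that `cbd`/`chd`/`cwd` produce), and `rotqby`, `insert_mask`, `shufb` and `movs` are hypothetical helpers modelled on the SPU ISA descriptions, not an SDK API.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* A quadword as 16 bytes in SPU (big-endian) order: b[0] is the leftmost byte. */
typedef struct { uint8_t b[16]; } qword;

/* rotqby: rotate left by (n & 15) bytes. */
static qword rotqby(qword a, uint32_t n) {
    qword r;
    for (uint32_t i = 0; i < 16; i++) r.b[i] = a.b[(i + n) & 15];
    return r;
}

/* cbd/chd/cwd for size 1/2/4: select rb's own bytes (0x10-0x1f) everywhere
   except the slot for addr, which takes ra's preferred-slot bytes. */
static qword insert_mask(uint32_t addr, uint32_t size) {
    qword m;
    uint32_t t = addr & 15 & ~(size - 1);
    for (uint32_t i = 0; i < 16; i++) m.b[i] = (uint8_t)(0x10 + i);
    for (uint32_t k = 0; k < size; k++) m.b[t + k] = (uint8_t)(4 - size + k);
    return m;
}

/* shufb: each control byte picks a byte from the 32-byte concatenation ra:rb.
   The constant-producing controls (0x80 and up) are not modelled. */
static qword shufb(qword a, qword b, qword c) {
    qword r;
    for (uint32_t i = 0; i < 16; i++) {
        assert(c.b[i] < 0x80);
        uint32_t s = c.b[i] & 0x1f;
        r.b[i] = s < 16 ? a.b[s] : b.b[s - 16];
    }
    return r;
}

/* One MOVS step of 1, 2 or 4 bytes, advancing *src and *dst like %esi/%edi. */
static void movs(uint8_t *ls, uint32_t *src, uint32_t *dst, uint32_t size) {
    assert((*src & (size - 1)) == 0 && (*dst & (size - 1)) == 0);  /* natural alignment */
    qword s, d;
    memcpy(s.b, ls + (*src & ~15u), 16);               /* lqd $7,0($5) */
    memcpy(d.b, ls + (*dst & ~15u), 16);               /* lqd $8,0($6) */
    qword v = rotqby(s, *src - (4 - size));            /* value into preferred slot */
    qword out = shufb(v, d, insert_mask(*dst, size));  /* cbd/chd/cwd + shufb */
    memcpy(ls + (*dst & ~15u), out.b, 16);             /* stqd $12,0($6) */
    *src += size;                                      /* ai $5,$5,size */
    *dst += size;                                      /* ai $6,$6,size */
}
```

Calling `movs` with sizes 4, 2 and 1 in turn, starting from a quadword-aligned copy of the string, leaves "Hello W" at the start of the destination with the eighth byte untouched, which is what the SPU sequence above is meant to do.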

Making the extra assumption that source and destination have the same alignment (here, both start on a quadword boundary), the three MOVS instructions can be combined and simplified to something like:

```
    lqa     $13,HelloWorldString
    lqa     $14,Destination
    fsmbi   $15,0x01ff          # 0x00 mask bytes for the first 7 bytes, 0xff for the rest
    selb    $16,$13,$14,$15     # copy only desired bytes into destination vector
    stqa    $16,Destination
```

With the further assumption that the rest of the destination quadword could be overwritten, this reduces to just a load and a store.
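The fsmbi/selb pair is the heart of this merge, so here is a host-side C model of both instructions. It's a sketch: quadwords are 16-byte big-endian arrays, and `merge_prefix` and `load16` are hypothetical helpers, not SDK functions. It shows why `0x01ff` keeps seven bytes: the top seven immediate bits are clear, so those bytes come from the first operand (the source) and the remaining nine from the destination.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct { uint8_t b[16]; } qword;

/* fsmbi: each immediate bit (MSB first) becomes a byte of 0xff or 0x00. */
static qword fsmbi(uint16_t imm) {
    qword m;
    for (int i = 0; i < 16; i++) m.b[i] = ((imm >> (15 - i)) & 1) ? 0xff : 0x00;
    return m;
}

/* selb rt,ra,rb,rc: bits from rb where rc is 1, from ra where rc is 0. */
static qword selb(qword a, qword b, qword c) {
    qword r;
    for (int i = 0; i < 16; i++)
        r.b[i] = (uint8_t)((a.b[i] & ~c.b[i]) | (b.b[i] & c.b[i]));
    return r;
}

/* Hypothetical helper: first n bytes from src, the rest from dst.
   n = 7 produces the 0x01ff mask used above. */
static qword merge_prefix(qword src, qword dst, unsigned n) {
    assert(n <= 16);
    return selb(src, dst, fsmbi((uint16_t)((1u << (16 - n)) - 1)));
}

/* Copy 16 bytes from memory into a modelled register (like lqa). */
static qword load16(const void *p) {
    qword q;
    memcpy(q.b, p, 16);
    return q;
}
```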

2. Setting / clearing the DF flag

There is no DF flag. It could be simulated through the use of an offset stored in another register that is added to addresses using lqx & stqx instructions, which would achieve the same kind of functionality.
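A byte-level C sketch of that idea follows. It's hypothetical: `copy_state` and `movsb_sim` aren't from any library, and the quadword load/rotate/insert details from section 1 are left out. The direction lives in an ordinary register-like field that is added to an index after each step, and each access forms its address as base plus index, the way lqx/stqx add two registers.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical register set for a DF-free MOVSB: the "flag" is just a value
   that gets added to the index after every step. */
typedef struct {
    uint32_t src_base;  /* like the base register of an lqx */
    uint32_t dst_base;  /* like the base register of an stqx */
    int32_t index;      /* like the index register added to both bases */
    int32_t dir;        /* +1 behaves like CLD, -1 like STD */
} copy_state;

static void movsb_sim(uint8_t *mem, copy_state *s) {
    assert(s->dir == 1 || s->dir == -1);
    /* address = base + index, as lqx/stqx form it from two registers */
    mem[s->dst_base + (uint32_t)s->index] = mem[s->src_base + (uint32_t)s->index];
    s->index += s->dir;  /* the side effect DF would have controlled */
}
```

Switching direction is then just a matter of loading a different value into `dir`, with no hidden state for later instructions to trip over.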

3. Using Rep

REP is a fascinating little instruction (strictly a prefix; it appears as the single byte 0xF3 in disassembly), but there’s no direct equivalent for the SPU. It could be mimicked in a general way on SPU using branches, but branches are a topic for later parts of the series, so I’ll avoid that for now.

Instead, because the length and alignments of source and destination are known, it can be (effectively) unrolled and branchless :)

```
85  #movl $HelloWorldString, %esi
86  #movl $DestinationUsingRep, %edi
87  #movl $25, %ecx          # set the string length in ECX
88  #cld                     # clear the DF
89  #rep movsb
91
95  lqa     $20,HelloWorldString
96  lqa     $21,HelloWorldString+16
97  lqa     $22,DestinationUsingRep+16
98  fsmbi   $23,0x007f
99  selb    $22,$21,$22,$23
100 stqa    $20,DestinationUsingRep
101 stqa    $22,DestinationUsingRep+16
```

A simple load and store for the first quadword, and a merge of the remaining 9 bytes (the 24 characters plus the terminating NUL make 25) into the second destination quadword. Again, making further assumptions about the destination memory would remove the need for lines 97–99.
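Here is a host-side C model of that unrolled copy, generalised slightly so the tail mask is computed from the length. It's a sketch, assuming quadword-aligned, non-overlapping buffers; `rep_movsb_aligned` is a hypothetical name, and `fsmbi`/`selb` model the SPU instructions on 16-byte big-endian arrays. Where the SPU version is branchless because the length is fixed at assembly time, this one uses an `if` for the tail.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct { uint8_t b[16]; } qword;

/* fsmbi: each immediate bit (MSB first) becomes a byte of 0xff or 0x00. */
static qword fsmbi(uint16_t imm) {
    qword m;
    for (int i = 0; i < 16; i++) m.b[i] = ((imm >> (15 - i)) & 1) ? 0xff : 0x00;
    return m;
}

/* selb: bits from b where c is 1, from a where c is 0. */
static qword selb(qword a, qword b, qword c) {
    qword r;
    for (int i = 0; i < 16; i++)
        r.b[i] = (uint8_t)((a.b[i] & ~c.b[i]) | (b.b[i] & c.b[i]));
    return r;
}

/* Hypothetical unrolled "rep movsb": whole quadwords are plain load/store
   pairs, and a partial last quadword is merged into the destination. */
static void rep_movsb_aligned(uint8_t *ls, uint32_t src, uint32_t dst, uint32_t len) {
    assert((src & 15) == 0 && (dst & 15) == 0);
    uint32_t whole = len & ~15u, tail = len & 15u;
    memcpy(ls + dst, ls + src, whole);                 /* lqa/stqa pairs */
    if (tail != 0) {
        qword s, d;
        memcpy(s.b, ls + src + whole, 16);             /* lqa source */
        memcpy(d.b, ls + dst + whole, 16);             /* lqa destination */
        qword m = fsmbi((uint16_t)((1u << (16 - tail)) - 1));  /* tail 9 -> 0x007f */
        qword r = selb(s, d, m);
        memcpy(ls + dst + whole, r.b, 16);             /* stqa */
    }
}
```

For the 25-byte string this copies one whole quadword and merges a 9-byte tail using exactly the `0x007f` mask from line 98.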

4. Loading string from memory into EAX register

```
    #leal HelloWorldString, %esi
    #lodsb
    ila     $24,HelloWorldString
    lqa     $25,HelloWorldString
    ai      $26,$24,-3
    rotqby  $27,$25,$26       # byte into the preferred slot (byte 3)

    #dec %esi
    #lodsw
    ai      $28,$24,-2
    rotqby  $29,$25,$28       # halfword into the preferred slot (bytes 2-3)

    #subl $2, %esi # Make ESI point back to the original string
    #lodsl
    rotqby  $30,$25,$24       # word into the preferred slot (bytes 0-3)
```

Similar to section 1: loading data and rotating it into the preferred slot of a register. Assuming a known source offset, and that the loaded data doesn’t span a quadword boundary, makes this much simpler than it would otherwise be.
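A host-side C model of the three loads, as a sketch: quadwords are 16-byte big-endian arrays, local store is a flat byte array, and `lods` is a hypothetical stand-in for LODSB/LODSW/LODSL, not an SDK function. It rotates by `addr-3`, `addr-2` or `addr` exactly as above, then reads the preferred word slot.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct { uint8_t b[16]; } qword;

/* rotqby: rotate left by (n & 15) bytes. */
static qword rotqby(qword a, uint32_t n) {
    qword r;
    for (uint32_t i = 0; i < 16; i++) r.b[i] = a.b[(i + n) & 15];
    return r;
}

/* Load the quadword holding addr, rotate the element into its preferred
   slot, then read that slot as a big-endian value. */
static uint32_t lods(const uint8_t *ls, uint32_t addr, uint32_t size) {
    assert(size == 1 || size == 2 || size == 4);
    assert((addr & (size - 1)) == 0);              /* so it can't span a quadword */
    qword q;
    memcpy(q.b, ls + (addr & ~15u), 16);           /* lqa */
    qword r = rotqby(q, addr - (4 - size));        /* ai + rotqby */
    uint32_t word = (uint32_t)r.b[0] << 24 | (uint32_t)r.b[1] << 16 |
                    (uint32_t)r.b[2] << 8 | r.b[3];
    return size == 4 ? word : word & ((1u << (8 * size)) - 1);
}
```

One difference worth keeping in mind: on ia32, lodsw from the start of the string leaves AX = 0x6548 because x86 is little-endian, whereas the big-endian SPU register holds 0x4865, i.e. the bytes in memory order.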

5. Storing strings from EAX to memory

```
    .align 4
shuf_AABABCDghijklmnop:
    .int 0x00000100,0x01020317,0x18191a1b,0x1c1d1e1f

    #leal DestinationUsingStos, %edi
    #stosb
    #stosw
    #stosl

    lqa     $31,DestinationUsingStos
    lqa     $32,shuf_AABABCDghijklmnop
    shufb   $33,$30,$31,$32   # "HHeHell" from $30, the rest from the destination
    stqa    $33,DestinationUsingStos
```

Rather than more repetitive merging and messing about, I chose to combine the three stores and get the same effect from a single shuffle, which also merges in the existing contents of the destination quadword.

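(The shuffle name reveals the intended function: capital letters A–P refer to bytes 0x00–0x0f of the first register, lower-case a–p to bytes 0x10–0x1f of the second. Read that way, the .int values spell AABABCDhijklmnop; the g in the label is one letter too many. I picked up this scheme from Insomniac Games’s R&D pages.)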
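Here is a host-side C model of that single shuffle, as a sketch: quadwords are 16-byte big-endian arrays, `shufb` only handles byte-select controls, and `stos_pattern` and `load16` are hypothetical names. The pattern bytes are the `.int` values from the `.data` section laid out big-endian. Feeding it the `$30` quadword from the lodsl step ("Hell" in the preferred slot) reproduces what stosb, stosw and stosl would leave behind on ia32: H, then "He", then "Hell".

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct { uint8_t b[16]; } qword;

/* The shuf_AABABCDghijklmnop constant, byte by byte (.int is big-endian). */
static const qword stos_pattern = {{
    0x00, 0x00, 0x01, 0x00, 0x01, 0x02, 0x03, 0x17,
    0x18, 0x19, 0x1a, 0x1b, 0x1c, 0x1d, 0x1e, 0x1f }};

/* shufb: each control byte picks a byte from the 32-byte concatenation ra:rb.
   The constant-producing controls (0x80 and up) are not modelled. */
static qword shufb(qword a, qword b, qword c) {
    qword r;
    for (int i = 0; i < 16; i++) {
        assert(c.b[i] < 0x80);
        unsigned s = c.b[i] & 0x1f;
        r.b[i] = s < 16 ? a.b[s] : b.b[s - 16];
    }
    return r;
}

/* Copy 16 bytes from memory into a modelled register (like lqa). */
static qword load16(const void *p) {
    qword q;
    memcpy(q.b, p, 16);
    return q;
}
```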

6. Comparing strings

```
    #cld
    #leal HelloWorldString, %esi
    #leal H3110, %edi
    #cmpsb

    lqa     $34,HelloWorldString
    lqa     $35,H3110
    ceqb    $36,$34,$35
```

There’s no byte-subtraction instruction for SPU, but ceqb compares the bytes in two registers for equality, setting each result byte to 0xff where they match and 0x00 where they don’t. That should be enough to work out whether two strings match, but it doesn’t give the kind of ordering you get from strcmp(). Getting the result from ceqb and making some kind of use of it may require shifts, rotates or other shenanigans.
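One way to get strcmp()-style ordering out of a ceqb result, sketched as host-side C. It assumes both strings start on a quadword boundary and end (or differ) within the first 16 bytes; `ceqb` follows my reading of the ISA, and `strcmp_qword` and `load16` are hypothetical helpers. It finds the first byte where ceqb reports 0x00, or where both strings end, and compares that pair as unsigned bytes, which is the ordering strcmp() defines. On the SPU itself, the "find the first mismatch" step might be done with gbb and clz instead of a loop, but I haven't tried that here.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

typedef struct { uint8_t b[16]; } qword;

/* ceqb: 0xff where the bytes are equal, 0x00 where they differ. */
static qword ceqb(qword a, qword b) {
    qword r;
    for (int i = 0; i < 16; i++) r.b[i] = a.b[i] == b.b[i] ? 0xff : 0x00;
    return r;
}

/* strcmp()-style result for strings contained in a single quadword. */
static int strcmp_qword(qword a, qword b) {
    qword eq = ceqb(a, b);
    for (int i = 0; i < 16; i++) {
        if (eq.b[i] == 0x00) return a.b[i] < b.b[i] ? -1 : 1;  /* first difference */
        if (a.b[i] == 0) return 0;                             /* both ended together */
    }
    assert(!"strings continue past this quadword");
    return 0;
}

/* Copy 16 bytes from memory into a modelled register (like lqa). */
static qword load16(const void *p) {
    qword q;
    memcpy(q.b, p, 16);
    return q;
}
```

For the two strings above, ceqb matches only the leading H; the first mismatch is 'e' against '3', so "Hello World of Assembly!" sorts after "H3110", as strcmp() would say.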

Jaymin Kessler has a post on scanning through pixel values that is also relevant for a number of string manipulation problems.

Previous assembly primer notes…

Part 1 — System Organization — PPC — SPU

Part 2 — Memory Organisation — SPU

Part 3 — GDB Usage Primer — PPC & SPU

Part 4 — Hello World — PPC — SPU

Part 5 — Data Types — PPC & SPU

Part 6 — Moving Data — PPC — SPU

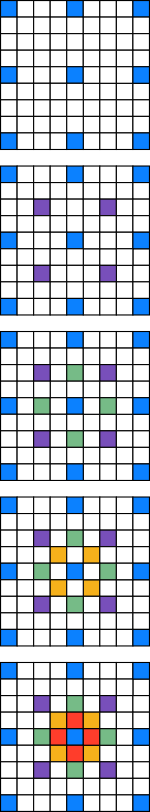

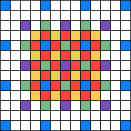

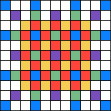

(There’s a smaller example pictured, again with a different colour for each iteration of the calculation)

(There’s a smaller example pictured, again with a different colour for each iteration of the calculation) bytes per tile. 171,680 bytes is a big chunk of local store, but it’s probably better to be using it for something…

bytes per tile. 171,680 bytes is a big chunk of local store, but it’s probably better to be using it for something…