Randomness is nice, except when you have too much.

It’s easy to blast random pixels all over the screen, but it’s much nicer when there’s some continuity in the randomness.

For diamond-square interpolation, I was looking for a way to generate (and use) random seed values that produce continuous-looking results within and between calculated tiles, and to vary these seed values over time in a somewhat appealing fashion.

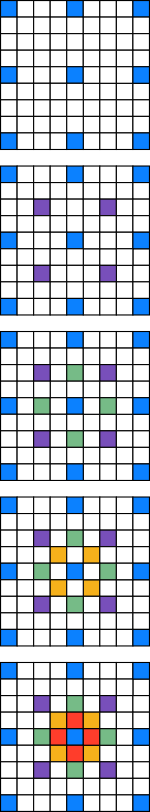

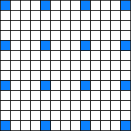

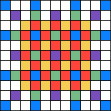

Calculating the values for a given tile requires the corner values of that tile along with the corner values of the eight surrounding tiles. That is, all the blue pixels in the picture are needed to calculate the intermediate pixels that are, in turn, needed to calculate the pixels in the centre tile.

The corner values of one tile affect the calculation of the adjacent tiles. For this reason, the corner values of each tile are state that must remain constant for the entire frame.

To render a full 1920×1080 frame, 15×9 tiles are required, with 16×10 corner values. To calculate the tiles around the frame border, further un-rendered tile data is required to correctly seed each of these border tiles. As a result, 18×12 random pixel values are needed to define the full set of tiles for a 1080p frame, which means the seeds for the tiles of a frame can be stored in 18×12×4 = 864 bytes. Not unreasonable.
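The arithmetic above can be checked with a few lines of Python. This is only a sketch: the 128-pixel tile edge is an assumption (the post gives the tile count, not the tile size, and 128 is simply a power of two that yields 15×9 tiles), and all names are made up for illustration.

```python
import math

FRAME_W, FRAME_H = 1920, 1080
TILE = 128           # ASSUMED tile edge in pixels; not stated in the post
BYTES_PER_SEED = 4   # one 32-bit pixel value per seed

# Tiles needed to cover the frame (the last row is only partly visible).
tiles_x = math.ceil(FRAME_W / TILE)
tiles_y = math.ceil(FRAME_H / TILE)

# Each tile has four corners, shared with its neighbours.
corners_x, corners_y = tiles_x + 1, tiles_y + 1

# One extra ring of seeds so that border tiles also have eight neighbours.
seeds_x, seeds_y = corners_x + 2, corners_y + 2

seed_bytes = seeds_x * seeds_y * BYTES_PER_SEED

print(tiles_x, tiles_y)      # 15 9
print(corners_x, corners_y)  # 16 10
print(seeds_x, seeds_y)      # 18 12
print(seed_bytes)            # 864
```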

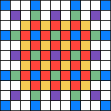

When rendering each tile, these seed values are first placed in the appropriate 16 locations (taking into account the compressed outer regions), and the interpolation takes place. (Interpolation is performed on the seed values and, later, using only values calculated during a previous iteration, which means there’s no requirement to zero memory between tiles.)
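To make the seeding and interpolation steps concrete, here is a minimal Python sketch. It is not the code described in this post: it lays the 3×3 block of tiles out at full size rather than using compressed outer regions, it assumes a power-of-two tile edge, it adds no random perturbation, and the function name `interpolate_centre_tile` is hypothetical.

```python
def interpolate_centre_tile(seeds, size):
    """Diamond-square interpolation (no perturbation) over a 3x3 block of tiles.

    seeds: 4x4 nested list of corner values (the 16 seed locations).
    size:  tile edge length; assumed to be a power of two.
    Returns the centre tile as a (size+1) x (size+1) nested list.
    """
    n = 3 * size + 1
    # Zeroed here only for simplicity: every cell is written before it is read.
    grid = [[0.0] * n for _ in range(n)]

    # Place the 16 seeds on the tile corners.
    for j in range(4):
        for i in range(4):
            grid[j * size][i * size] = float(seeds[j][i])

    step = size
    while step > 1:
        half = step // 2
        # Diamond step: the centre of each square is the mean of its 4 corners.
        for y in range(half, n, step):
            for x in range(half, n, step):
                grid[y][x] = (grid[y - half][x - half] + grid[y - half][x + half] +
                              grid[y + half][x - half] + grid[y + half][x + half]) / 4.0
        # Square step: each edge midpoint is the mean of its in-bounds neighbours.
        for y in range(0, n, half):
            for x in range(0, n, half):
                if (y // half + x // half) % 2 == 1:
                    total, count = 0.0, 0
                    for dy, dx in ((-half, 0), (half, 0), (0, -half), (0, half)):
                        yy, xx = y + dy, x + dx
                        if 0 <= yy < n and 0 <= xx < n:
                            total += grid[yy][xx]
                            count += 1
                    grid[y][x] = total / count
        step = half

    # Keep only the centre tile, including its shared edges.
    return [row[size:2 * size + 1] for row in grid[size:2 * size + 1]]


seeds = [[4 * j + i for i in range(4)] for j in range(4)]
tile = interpolate_centre_tile(seeds, 4)
print(tile[0][0], tile[0][4], tile[4][0], tile[4][4])  # the four centre-tile seeds
```

Each pass reads only seeds or values written by an earlier pass, which is the property that makes zeroing memory between tiles unnecessary.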

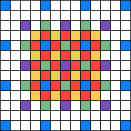

The result with just this looks quite reasonable (imho): there’s decent interpolation of random values across the screen. These random seed values can also be permuted without too much difficulty between frames. I’ve tried some trig-function based methods to give a sense of continuous, directional changes. It works, but I’m sure there are better options.
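One way a trig-based variation of the seeds could look is sketched below. This is an assumption rather than the method used here: each seed gets a fixed random phase, and a sine wave travelling across the seed grid moves every value smoothly with time. The names `make_phases` and `seeds_at` and the parameters `kx`, `ky` and `speed` are all hypothetical.

```python
import math
import random


def make_phases(cols=18, rows=12, rng_seed=1):
    """One fixed random phase per seed location (18x12 for a 1080p frame)."""
    rng = random.Random(rng_seed)
    return [[rng.uniform(0.0, 2.0 * math.pi) for _ in range(cols)]
            for _ in range(rows)]


def seeds_at(phases, t, kx=0.35, ky=0.2, speed=1.0):
    """Seed values in [0, 1] at time t.

    kx and ky set the direction of the travelling wave across the grid;
    speed sets how quickly the values change from frame to frame.
    """
    return [[0.5 + 0.5 * math.sin(phase + kx * i + ky * j - speed * t)
             for i, phase in enumerate(row)]
            for j, row in enumerate(phases)]


phases = make_phases()
frame0 = seeds_at(phases, 0.0)
frame1 = seeds_at(phases, 1.0 / 60.0)  # next frame at 60 fps
```

Because each value is a continuous function of `t`, consecutive frames differ only slightly, and the `kx`/`ky` terms give the change a direction.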

As for the random perturbing of interpolated values, I’ve tried a few methods and not achieved anything that I’ve been all that happy with. The problem I’ve had is finding/generating a suitably random value of appropriate scale, and applying it to the pixel value fast enough.
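For reference, the standard diamond-square algorithm shrinks the random offset as the step size shrinks. The sketch below shows one cheap way to get a repeatable offset of matching scale from a pixel's coordinates. It is an illustration under assumptions, not something tried in this post: the integer hash is an arbitrary mixing function with no claims about quality, and `hash01`, `perturb` and `amplitude` are hypothetical names.

```python
def hash01(x, y, frame=0):
    """Map integer coordinates to a repeatable pseudo-random value in [0, 1)."""
    h = (x * 374761393 + y * 668265263 + frame * 2147483647) & 0xFFFFFFFF
    h = ((h ^ (h >> 13)) * 1274126177) & 0xFFFFFFFF
    h ^= h >> 16
    return h / 2.0 ** 32


def perturb(value, x, y, step, size, amplitude=0.25, frame=0):
    """Add an offset whose maximum size scales with the current step.

    At step == size the offset lies within +/- amplitude; it halves each
    time the step halves, so fine detail is perturbed less than coarse.
    """
    scale = amplitude * step / size
    return value + (2.0 * hash01(x, y, frame) - 1.0) * scale


print(perturb(0.5, 3, 7, step=4, size=16))
```

Hashing the coordinates rather than drawing from a stateful generator means two tiles that share an edge compute the same offset for the same pixel.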

(As I reach the end of this, I have the impression that what I’ve put together is almost, but not entirely unlike, Perlin noise — you could possibly say that it has a really clumsy interpolation function. I have a much better understanding of Perlin noise than when I began, and it really looks like I should have tried that. Learning is Good.)

At some stage in the not too distant future, I hope to perform some screencaps and post some actual images and maybe even a video. And fwiw I’ll post the source code, too.

(There’s a smaller example pictured, again with a different colour for each iteration of the calculation)

bytes per tile. 171,680 bytes is a big chunk of local store, but it’s probably better to be using it for something…