warning C4146: unary minus operator applied to unsigned type, result still unsigned

I saw this warning recently.

“Aha!” I thought. “A common source of errors, able to strike down the unsuspecting programmer. Thank you crafters of Visual C++ compiler warnings, tirelessly laboring to uncover wrong assumptions and naively written code.”

“What?” I exclaimed. “Of course the result is still unsigned. That’s how the language is designed, and that’s what I wanted!”

Nevertheless, I read the documentation for the warning to see if there was anything I could glean from it — particularly to see if I could find sufficient reason to not just #pragma disable it.

This is what you can find in the documentation:

Unsigned types can hold only non-negative values, so unary minus (negation) does not usually make sense when applied to an unsigned type. Both the operand and the result are non-negative.

Negation of an unsigned value may not make sense if you don’t know what it means — it is well defined. Regardless, this is a level 2 warning. It is designed to catch common mistakes and misunderstandings and notify the programmer to have them look more closely. It may be an entirely reasonable thing to warn about.

The documentation continues with some rationale:

Practically, this occurs when the programmer is trying to express the minimum integer value, which is -2147483648. This value cannot be written as -2147483648 because the expression is processed in two stages:

- The number 2147483648 is evaluated. Because it is greater than the maximum integer value of 2147483647, the type of 2147483648 is not int, but unsigned int.

- Unary minus is applied to the value, with an unsigned result, which also happens to be 2147483648.

The first point is wrong. Wrong for a standards-conformant C++ implementation, anyway. The second would be accurate if the first was accurate (because 232 – 231 == 231)

Here’s what the most recent draft of the C++ standard says about the integer literal types:

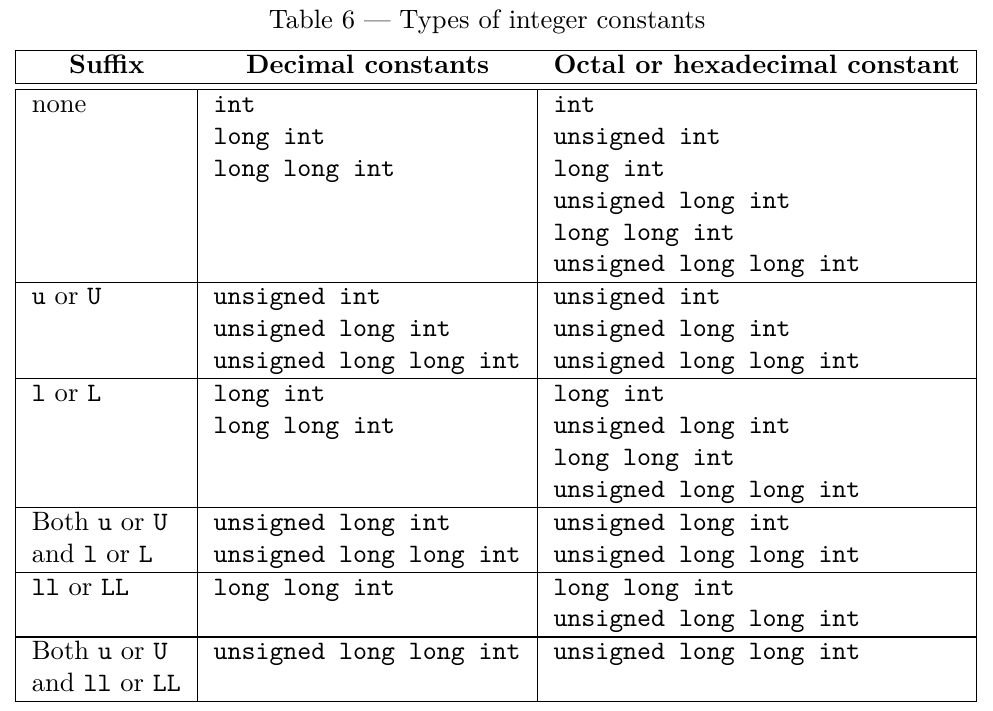

The type of an integer literal is the first of the corresponding list in Table 6 in which its value can be represented.

2147483648 is a decimal constant with no suffix. When using VC++ with it’s 32 bit long int type, the first of the corresponding list in which its value can be represented is the 64 bit long long int. An unsigned type is never an option.

Unary minus should then be applied to long long int 2147483648, which should result in long long int -2147483648. There’s nothing unsigned in this process

Use of the result should behave in an unsurprising way, too — long long int -2147483648 can be assigned to a variable of type int and nothing unexpected will happen. The type can be converted without affecting the value.

According to the standard, the rationale is flawed, and the warning seems pointless to me.

In theory, there’s no difference between theory and practise

So I tried compiling the example program from the documentation to see what would happen.

// C4146.cpp

// compile with: /W2

#include <stdio.h>

void check(int i)

{

if (i > -2147483648) // C4146

printf_s("%d is greater than the most negative int\n", i);

}

int main()

{

check(-100);

check(1);

}

The documentation predicts the following outcome:

The expected second line, 1 is greater than the most negative int, is not printed because ((unsigned int)1) > 2147483648 is false.

If I build the program with gcc 4.9.2, both lines print.

If I build the program with Visual C++ 2012, or even 2015 Preview, only one line is printed (as was predicted).

So there is legitimacy to this warning — this is an area that Visual C++ is not compliant with the standard.

Maybe it’s because the standard has changed? I looked at the earliest version of the text available in the cplusplus github repo dating from late 2011, and that has the same rules as quoted above.

I went back further and found copies of the standard from 2003 and 1998, both of which state:

The type of an integer literal depends on its form, value, and suffix. If it is decimal and has no suffix, it has the first of these types in which its value can be represented: int, long int; if the value cannot be represented as a long int, the behavior is undefined.

So it’s a detail that was previously undefined, which means that the compiler is permitted to do whatever it wants. In this case, we’ll get a warning, but only if the programmer has asked for it using option /W2.

The documentation is accurate, and Visual C++ hasn’t kept up with changes in the standard. This shouldn’t be surprising.

Update: long long int was added to the standard as part of C++11. It appears that VC++ has had long long support since at least Visual Studio .NET 2003

So what?

This investigation arose from my reading of Visual C++ documentation in the context of what I knew of a recent draft of the C++ standard. It turns out that these two things are less connected than I had assumed. Unsurprisingly, the Visual C++ documentation describes Visual C++, not the standard.

While it would be nice if deviations from the standard were clearly marked in the documentation, and even nicer if the Visual C++ compiler was consistent with the ISO standard, the reality is that they are not and it is not.

One should always pay close attention to context, which happens to apply as much when reading about the C++ language as it does when writing C++ code.